Big Data Engineer Resume Guide

Big Data Engineers create, maintain and optimize the data pipeline architecture to ensure efficient access of large datasets. They design, develop and implement big data solutions for processing vast amounts of structured and unstructured information in order to gain actionable insights from it.

You have the skills to crunch huge amounts of data and glean insights from it. But potential employers don’t know how talented you are unless you write a resume that stands out among other applicants. Show them what they’re missing with an impressive big data engineer resume!

This guide will walk you through the entire process of creating a top-notch resume. We first show you a complete example and then break down what each resume section should look like.

Table of Contents

The guide is divided into sections for your convenience. You can read it from beginning to end or use the table of contents below to jump to a specific part.

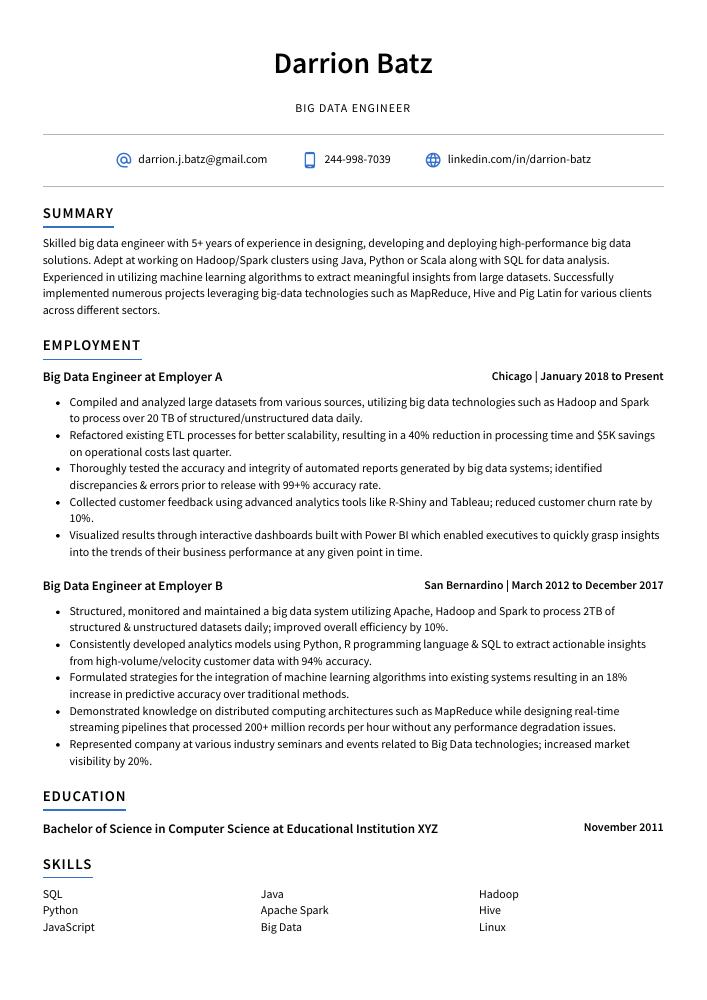

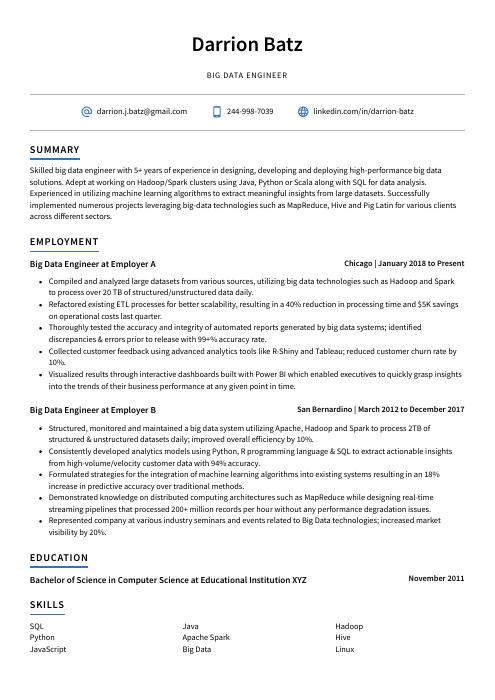

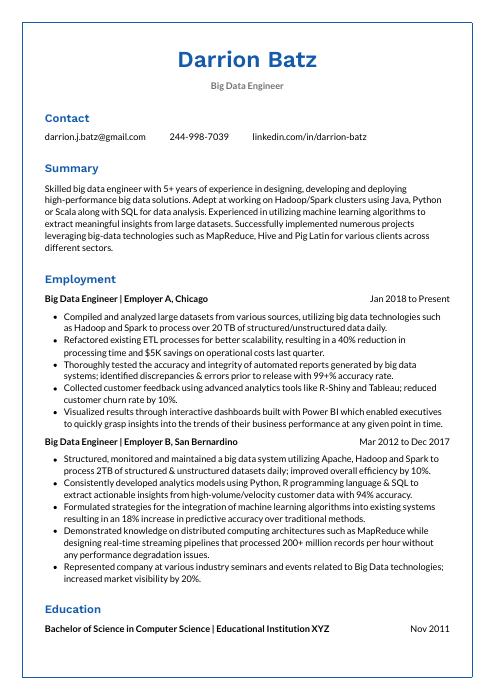

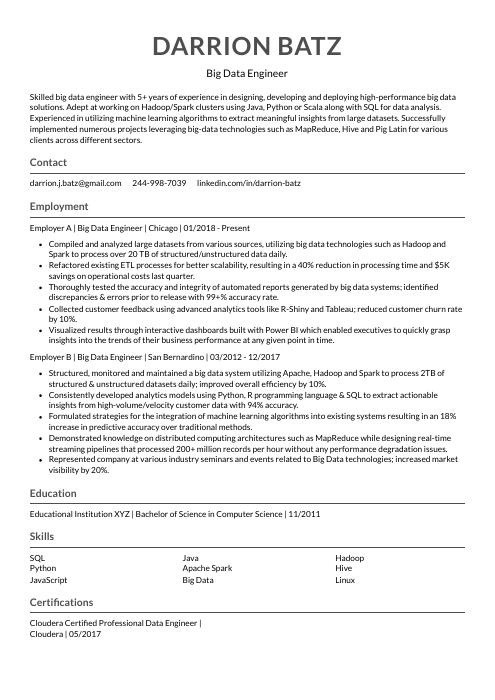

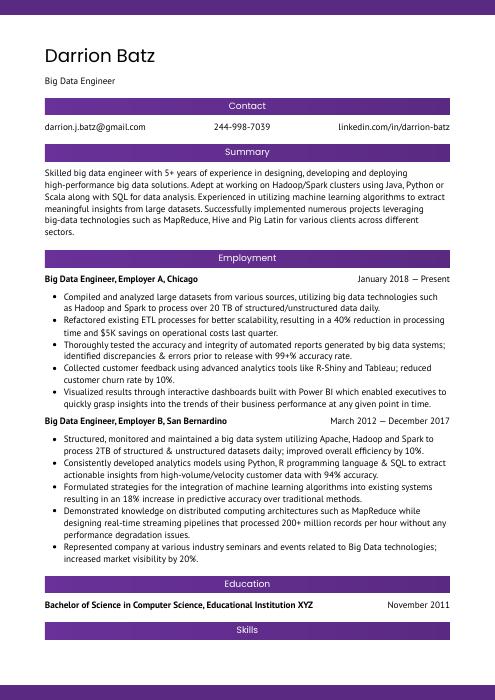

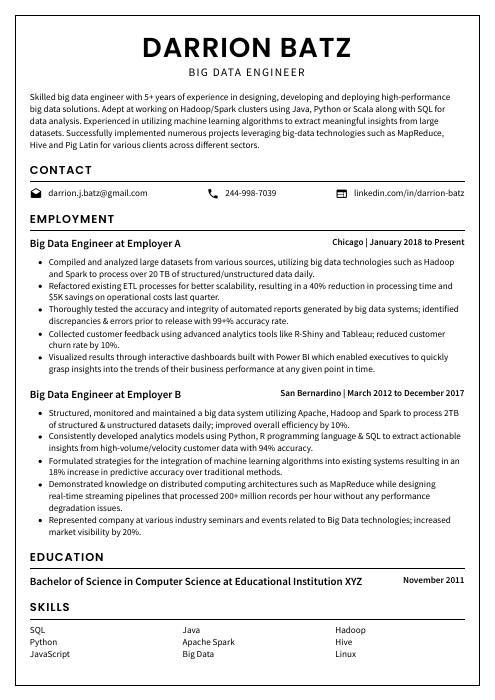

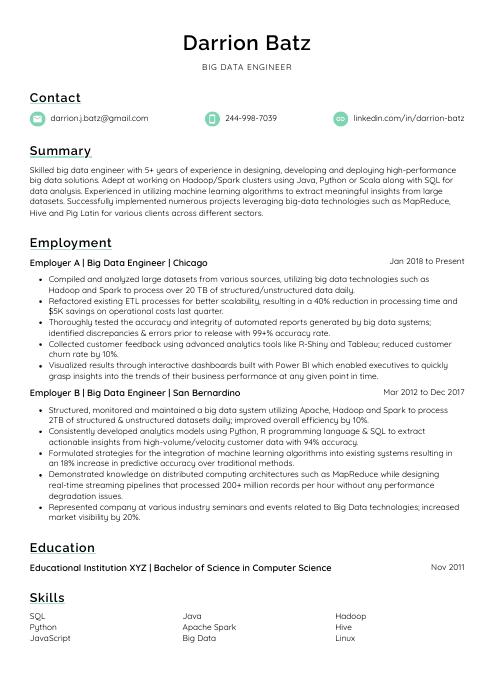

Big Data Engineer Resume Sample

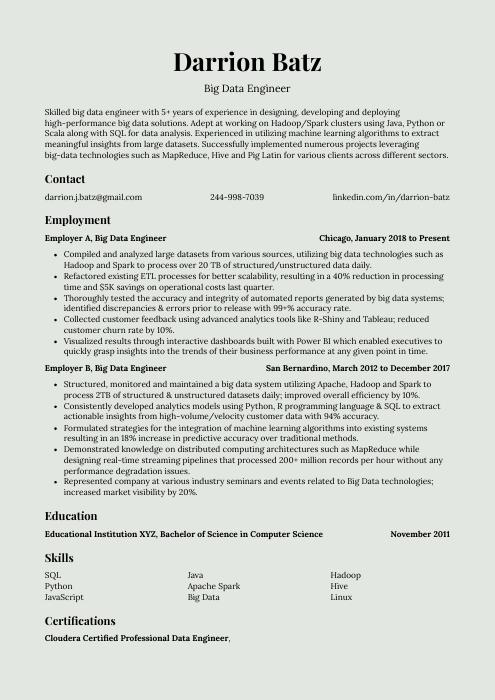

Darrion Batz

Big Data Engineer

darrion.j.batz@gmail.com

244-998-7039

linkedin.com/in/darrion-batz

Summary

Skilled big data engineer with 5+ years of experience in designing, developing and deploying high-performance big data solutions. Adept at working on Hadoop/Spark clusters using Java, Python or Scala along with SQL for data analysis. Experienced in utilizing machine learning algorithms to extract meaningful insights from large datasets. Successfully implemented numerous projects leveraging big-data technologies such as MapReduce, Hive and Pig Latin for various clients across different sectors.

Experience

Big Data Engineer, Employer A

Chicago, Jan 2018 – Present

- Compiled and analyzed large datasets from various sources, utilizing big data technologies such as Hadoop and Spark to process over 20 TB of structured/unstructured data daily.

- Refactored existing ETL processes for better scalability, resulting in a 40% reduction in processing time and $5K savings on operational costs last quarter.

- Thoroughly tested the accuracy and integrity of automated reports generated by big data systems; identified discrepancies & errors prior to release with 99+% accuracy rate.

- Collected customer feedback using advanced analytics tools like R-Shiny and Tableau; reduced customer churn rate by 10%.

- Visualized results through interactive dashboards built with Power BI which enabled executives to quickly grasp insights into the trends of their business performance at any given point in time.

Big Data Engineer, Employer B

San Bernardino, Mar 2012 – Dec 2017

- Structured, monitored and maintained a big data system utilizing Apache, Hadoop and Spark to process 2TB of structured & unstructured datasets daily; improved overall efficiency by 10%.

- Consistently developed analytics models using Python, R programming language & SQL to extract actionable insights from high-volume/velocity customer data with 94% accuracy.

- Formulated strategies for the integration of machine learning algorithms into existing systems resulting in an 18% increase in predictive accuracy over traditional methods.

- Demonstrated knowledge on distributed computing architectures such as MapReduce while designing real-time streaming pipelines that processed 200+ million records per hour without any performance degradation issues.

- Represented company at various industry seminars and events related to Big Data technologies; increased market visibility by 20%.

Skills

- SQL

- Java

- Hadoop

- Python

- Apache Spark

- Hive

- JavaScript

- Big Data

- Linux

Education

Bachelor of Science in Computer Science

Educational Institution XYZ

Nov 2011

Certifications

Cloudera Certified Professional Data Engineer

Cloudera

May 2017

1. Summary / Objective

Your resume summary is the first impression you make on a potential employer. As such, it should be concise and compelling – highlighting your experience as a big data engineer and what sets you apart from other applicants. For example, you could mention the programming languages and frameworks that are most familiar to you, any certifications or degrees related to big data engineering that have been obtained recently, and how your work has helped organizations optimize their operations in terms of cost savings or efficiency gains.

Below are some resume summary examples:

Energetic Big Data engineer with 10 years of experience in developing and managing Big Data solutions. Skilled at designing, building, testing and supporting Hadoop-based distributed systems. Experienced in creating data pipelines to process data from multiple sources for analysis by leveraging AWS cloud technologies such as S3, EMR, Lambda functions & EC2 instances. Adept at using various analytical tools like Apache Spark and Presto to analyze large datasets quickly and accurately.

Well-rounded Big Data Engineer with 5+ years of experience developing and maintaining big data solutions. Experienced working in both AWS and Hadoop ecosystems, creating high-performance systems for managing large datasets. Skilled at using various programming languages to create efficient ETL pipelines that automate data ingestion, transformation, storage, and analysis processes. Proven track record of success leading projects from concept to delivery while meeting tight deadlines.

Professional Big Data Engineer with 5+ years of experience in developing and managing data pipelines, designing cloud architectures, and creating reliable analytics models. Skilled at optimizing ETL processes, automating tasks to improve scalability, and leveraging machine learning algorithms for efficient analysis. Seeking to join ABC Tech as a Big Data Engineer to utilize my expertise in constructing robust big data solutions that will drive business success.

Driven Big Data engineer with 8+ years of experience developing and deploying solutions to process large-scale data sets across distributed systems. Proven track record of building robust databases, designing efficient ETL pipelines, and creating meaningful insights from complex datasets. Aiming to leverage strong technical skillset and knowledge in machine learning models to join ABC Tech’s Big Data team as a senior engineer.

Enthusiastic big data engineer with 5+ years of experience in building and maintaining distributed databases, developing ETL pipelines, and creating data-driven applications. Skilled in leveraging Hadoop, Spark, Hive/PigQL as well as Java to design solutions that maximize business value from big datasets. Aiming to join ABC Tech to create resilient architectures for processing large amounts of data quickly and accurately.

Dependable big data engineer with 5+ years of experience in designing, developing and deploying efficient big data solutions. Skilled at working on a variety of platforms such as Hadoop, Apache Spark and Kafka to create complex architectures that enable organizations to make use of their massive datasets. Experienced in integrating analytics into the existing IT infrastructure for improved performance and scalability.

Diligent Big Data Engineer with 5+ years of experience designing and developing data pipelines for enterprise-level systems. Expert in leveraging latest technologies, such as Hadoop, Apache Spark and Kafka to create efficient extract transform load (ETL) processes. Experienced in leveraging big data analytics techniques to generate meaningful insights from large datasets for business intelligence purposes.

Reliable Big Data Engineer with 5+ years of experience deploying and managing distributed computing systems. Expert in designing, developing, testing and optimizing large-scale data pipelines to ingest structured and unstructured data from multiple sources. Seeking to join ABC Tech to build reliable big data solutions that drive business insights for the organization’s network of customers.

2. Experience / Employment

In the experience section, you should list your employment history in reverse chronological order. This means that the most recent job is listed first.

When writing out what you did at each role, use bullet points to make it easier for the reader to digest and understand quickly. When providing details about what you did, be sure to include quantifiable results if possible; this will help demonstrate how successful your work was.

For example, instead of saying “Developed big data solutions,” say something like “Designed a scalable cloud-based platform for real-time analytics using Apache Spark and AWS EC2 instances which increased processing speed by 40%.”

To write effective bullet points, begin with a strong verb or adverb. Industry specific verbs to use are:

- Analyzed

- Optimized

- Automated

- Visualized

- Implemented

- Monitored

- Collected

- Processed

- Structured

- Developed

- Deployed

- Refactored

- Tested

- Debugged

- Secured

Other general verbs you can use are:

- Achieved

- Advised

- Assessed

- Compiled

- Coordinated

- Demonstrated

- Expedited

- Facilitated

- Formulated

- Improved

- Introduced

- Mentored

- Participated

- Prepared

- Presented

- Reduced

- Reorganized

- Represented

- Revised

- Spearheaded

- Streamlined

- Utilized

Below are some example bullet points:

- Improved processing speed of large datasets by 25% through the implementation of big data engineering solutions such as Hadoop and Apache Spark.

- Utilized advanced analytical techniques to identify actionable insights from millions of records in structured, semi-structured and unstructured datasets for clients across various industries.

- Achieved 80% accuracy on predictive models built using machine learning algorithms to forecast customer behavior and trends in the market place with high precision and recall rate.

- Reduced costs associated with storing large volumes of data by 30%, while simultaneously increasing retrieval time speeds when accessing information stored on distributed databases like Cassandra or MongoDB.

- Meticulously troubleshooted complex network issues related to scalability, latency, throughput performance optimization & security vulnerabilities throughout entire software development lifecycle (SDLC).

- Revised and improved the existing data pipeline architecture to process over 20 million records per day, resulting in a 70% increase in processing speed.

- Debugged and resolved technical issues related to software development for big data solutions within an Agile environment; decreased bug resolution time by 50%.

- Prepared detailed reports through querying large datasets using HiveQL & Apache Spark SQL; reduced query execution times from 4 hours to 10 minutes on average.

- Optimized enterprise-level Hadoop clusters for scaling up analytics queries and improving performance; achieved 25x faster response rates than before optimization efforts were implemented.

- Proficiently developed ETL pipelines with Python & PySpark that integrated Big Data sources into robust architectures, enabling high-speed analysis of structured/unstructured datasets with 100% accuracy rate across all projects undertaken so far.

- Developed and implemented big data solutions for 3+ enterprise-level organizations, increasing productivity by 30% and generating annual savings of $2.5M+.

- Reorganized existing datasets to improve accuracy and usability; achieved 50% reduction in query response times across all databases.

- Actively maintained Hadoop clusters, deploying new nodes as needed while monitoring performance metrics such as throughput, latency & storage capacity utilization rates on a daily basis.

- Secured sensitive customer information through encryption techniques and optimized the use of cloud computing services like AWS to reduce costs associated with hosting resources by 20%.

- Monitored ETL processes using tools such Apache Sqoop & Pig Latin for data ingestion into HDFS/Hive systems; improved quality assurance checks thereby reducing errors from 5% to 1%.

- Implemented Big Data technologies and analytics tools to process over 500 million records of data per day, driving a 10% increase in operational efficiency.

- Introduced cutting-edge AI algorithms for predictive analysis that improved customer segmentation results by 25%.

- Efficiently managed large datasets using distributed computing platforms such as Hadoop HDFS, Apache Spark & Cassandra; decreased query response time from minutes to milliseconds on average.

- Coordinated cross-functional teams of developers and analysts to develop scalable Big Data architectures with high availability requirements; achieved 99% uptime consistently across all environments within the last year.

- Assessed complex business problems through analyzing historical trends & patterns hidden inside vast volumes of unstructured data; identified potential opportunities worth $15M in revenue for company expansion into new markets.

- Effectively analyzed and interpreted large datasets to identify complex patterns, trends and correlations that enabled the organization to develop innovative solutions with high accuracy rates of over 95%.

- Spearheaded the development of a comprehensive big data architecture integrating several sources into an online platform; oversaw successful deployment within two months ahead of schedule.

- Mentored junior engineers in designing efficient algorithms for ingesting streaming data from multiple sources, resulting in improved performance by up to 40% on key metrics.

- Deployed Apache Hadoop clusters across various cloud platforms (AWS, Azure & GCP) while ensuring adherence to security protocols; achieved cost savings of $30,000 per quarter through optimized usage models and resource allocation strategies.

- Developed automated scripts using R programming language that increased analysis processing speed by 20%, enabling faster decision-making and generating more timely insights from business critical data sets.

- Advised senior executives on big data strategies, leading to a 40% improvement in the company’s overall efficiency.

- Confidently managed large-scale projects related to database architecture and mining of structured/unstructured datasets; successfully implemented new algorithms that improved operational performance by 25%.

- Expedited project completion times by designing automated scripts for data cleansing & transformation processes, resulting in faster ETL cycles and reduced manual effort by 60%.

- Streamlined network infrastructure design through efficient Hadoop clusters utilization; enabled cost savings worth $50K+ per annum over traditional platforms such as Oracle & SQL Server databases.

- Presented innovative solutions at industry conferences which resulted in increased exposure of the firm’s technical capabilities within the Big Data space; drove an additional 5 million visitors to website every month from targeted markets around the world.

- Processed over 1TB of data daily, utilizing big data technologies such as Hadoop and Spark to design efficient ETL pipelines for the purpose of analysis.

- Participated in cross-functional meetings with stakeholders from various departments to develop requirements for advanced analytics projects; successfully implemented 3+ real-time predictive models resulting in a 25% improvement in accuracy.

- Facilitated training sessions on big data techniques and toolsets such as Python, Hive, Pig & Scala across 10+ team members while providing ongoing support during project development cycles.

- Successfully designed distributed systems architecture focused towards supporting the processing needs of streaming applications within given time constraints; reduced latency by 40%.

- Tested code quality prior to deployment using automated methods including unit testing frameworks (JUnit)and integration tests (Selenium); achieved 99% success rate in all test cases completed so far.

3. Skills

Even though two organizations are hiring for the same role, the skillset they want an ideal candidate to possess could differ significantly. For instance, one may be on the lookout for an individual with expertise in Hadoop while another may prefer someone with knowledge of Apache Spark.

The skills section of your resume should be tailored to the job you are applying for, as this is what applicant tracking systems will scan for when evaluating resumes. Therefore, it’s important to include specific keywords that match the job description and highlight any relevant experience or qualifications you possess.

In addition to listing your skills here, make sure to elaborate on them further in other sections such as the summary or work history section.

Below is a list of common skills & terms:

- ASP.NET

- Agile Methodologies

- Algorithms

- Amazon Web Services

- Apache Kafka

- Apache Pig

- Apache Spark

- Big Data

- Big Data Analytics

- Business Analysis

- Business Intelligence

- C

- C#

- C++

- CSS

- Cascading Style Sheets

- Cassandra

- Cloud Computing

- Core Java

- DB2

- Data Analysis

- Data Engineering

- Data Migration

- Data Mining

- Data Modeling

- Data Science

- Data Warehousing

- Database Design

- Databases

- ETL

- Eclipse

- Elasticsearch

- Git

- HBase

- HTML

- HTML5

- Hadoop

- Hibernate

- Hive

- Informatica

- JSON

- JSP

- Java

- Java Enterprise Edition

- JavaScript

- Jenkins

- Linux

- MATLAB

- Machine Learning

- MapReduce

- Maven

- Microsoft SQL Server

- MongoDB

- MySQL

- NoSQL

- Oracle

- PHP

- PL/SQL

- Performance Tuning

- PostgreSQL

- Programming

- Python

- R

- Requirements Analysis

- SDLC

- SQL

- Scala

- Scrum

- Shell Scripting

- Software Development

- Software Development Life Cycle

- Software Project Management

- Solution Architecture

- Spark

- Spring Framework

- Sqoop

- Statistics

- Tableau

- Teamwork

- Teradata

- Testing

- Unix

- Unix Shell Scripting

- Web Development

- Web Services

- Windows

- XML

- jQuery

4. Education

Mentioning your education on your resume will depend on how far along you are in your career. If you just graduated and have no work experience, include an education section below your resume objective. However, if you have significant work experience to showcase, omitting the education section is perfectly acceptable.

If including an education section is necessary for the big data engineer role, try to mention courses or subjects related to engineering that demonstrate knowledge of software development principles and techniques as well as database management systems and programming languages like Python or Java.

Bachelor of Science in Computer Science

Educational Institution XYZ

Nov 2011

5. Certifications

Certifications are a great way to show potential employers that you have the skills and knowledge necessary for the job. They demonstrate your commitment to professional development, as well as prove that you are proficient in certain areas.

If you have any certifications relevant to the position, make sure they are included on your resume so hiring managers can easily see them. This will help give them an idea of what kind of value you could bring to their organization if hired.

Cloudera Certified Professional Data Engineer

Cloudera

May 2017

6. Contact Info

Your name should be the first thing a reader sees when viewing your resume, so ensure its positioning is prominent. Your phone number should be written in the most commonly used format in your country/city/state, and your email address should be professional.

You can also choose to include a link to your LinkedIn profile, personal website, or other online platforms relevant to your industry.

Finally, name your resume file appropriately to help hiring managers; for Darrion Batz, this would be Darrion-Batz-resume.pdf or Darrion-Batz-resume.docx.

7. Cover Letter

Attaching a cover letter to your job application is an essential part of the recruitment process. It should be composed of 2 to 4 paragraphs and provide more detail about who you are, what makes you a great fit for the role and why you’re interested in it.

Writing a cover letter can help set yourself apart from other applicants by providing insight into who you are beyond just the facts listed on your resume. This gives employers a better understanding of how well-suited you’d be for their open position.

Below is an example cover letter:

Dear Spencer,

I am writing to apply for the position of Big Data Engineer at XYZ Corporation. As a big data engineer with 5+ years of experience working with large data sets, I am confident that I can make a significant contribution to your organization.

In my current role as a big data engineer at ABC Corporation, I work with a team of engineers to design and implement big data solutions for our clients. We have used Hadoop, Spark, and Hive to process and analyze data sets ranging in size from 1 TB to 100 TB. In addition, we have developed custom MapReduce programs to process data that does not fit neatly into one of the standard formats.

I am confident that I can bring this same level of expertise to XYZ Corporation. In addition, I have experience leading teams of engineers and providing training on big data technologies. I believe that my skills and experience will be an asset to your organization and look forward to discussing how I can contribute to your team’s success.

Sincerely,

Darrion

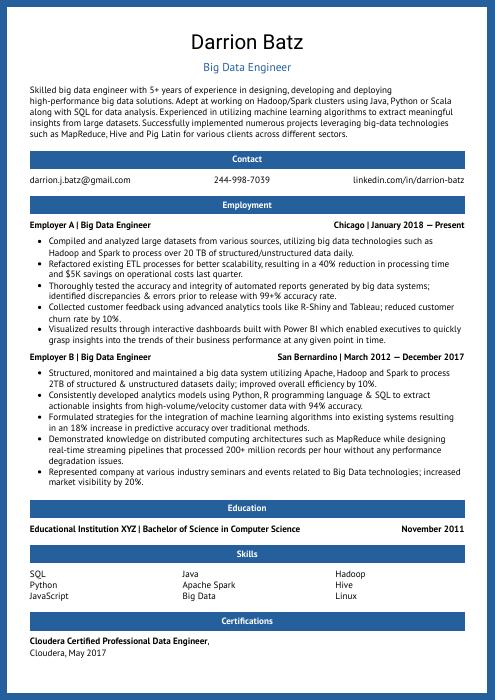

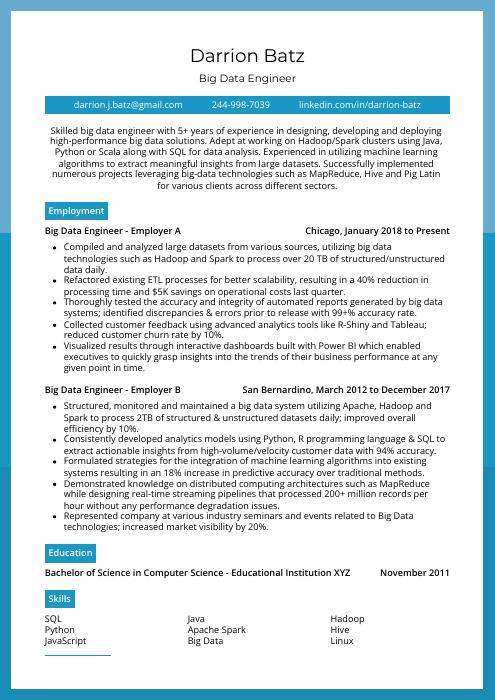

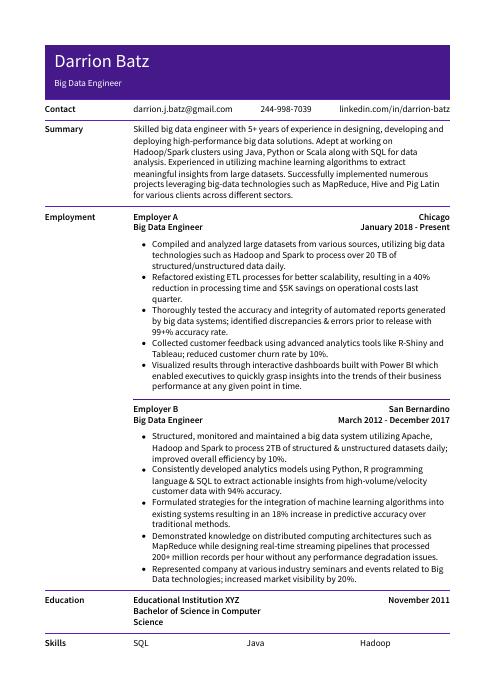

Big Data Engineer Resume Templates

Ocelot

Ocelot Rhea

Rhea Pika

Pika Saola

Saola Fossa

Fossa Dugong

Dugong Hoopoe

Hoopoe Axolotl

Axolotl Markhor

Markhor Gharial

Gharial Quokka

Quokka Indri

Indri Jerboa

Jerboa Kinkajou

Kinkajou Bonobo

Bonobo Cormorant

Cormorant Lorikeet

Lorikeet Numbat

Numbat Echidna

Echidna Rezjumei

Rezjumei